Introduction

Cache Memory stands as a silent yet vital contributor to system performance. While it might not be as commonly recognized as RAM or hard drives, cache memory plays a fundamental role in the smooth operation of digital devices.

Cache memory, also called cache, is a supplementary memory system that temporarily stores frequently used instructions and data for quicker processing by the central processing unit (CPU) of a computer.

The cache augments, and is an extension of, a computer’s main memory. Both main memory and cache are internal random-access memories (RAMs) that use semiconductor-based transistor circuits. Cache holds a copy of only the most frequently used information or program codes stored in the main memory.

The smaller capacity of the cache reduces the time required to locate data within it and provide it to the CPU for processing. When a computer’s CPU accesses its internal memory, it first checks to see if the information it needs is stored in the cache. If it is, the cache returns the data to the CPU.

If the information is not in the cache, the CPU retrieves it from the main memory. Disk cache memory operates similarly, but the cache is used to hold data that have recently been written to, or retrieved from, a magnetic disk or other external storage device.

In this exploration, we will examine cache memory: its types, how it works, and its role in modern computers.

What is Cache Memory?

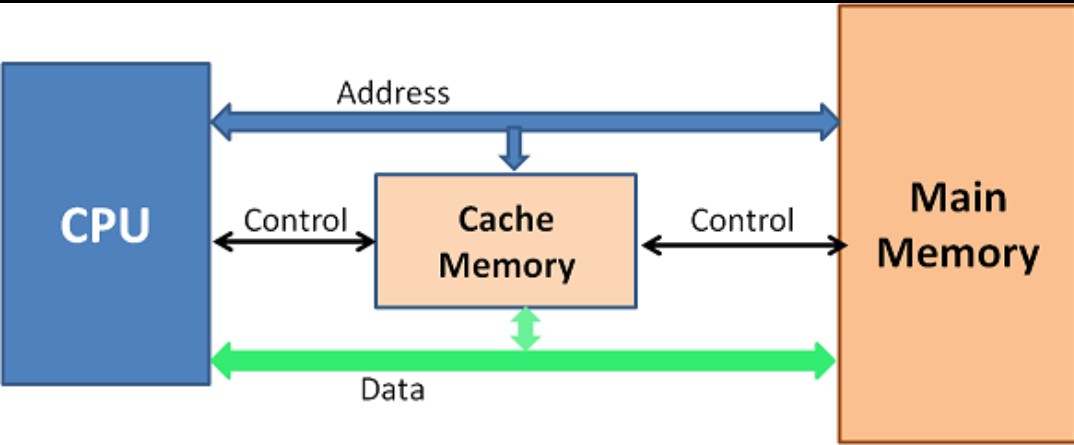

Cache memory is a small, high-speed, and volatile storage element situated between the CPU (Central Processing Unit) and the main memory (RAM) in a computer system.

Its primary function is to store frequently used data and instructions, enabling the CPU to access them without the delays of fetching them from the main memory.

Characteristics of Cache Memory

1. High Speed: Cache memory is meticulously designed for speed, operating at a pace closely aligned with the CPU, thereby minimizing data access latency.

2. Volatile Nature: Cache memory is inherently volatile; it loses its contents when the computer is powered off. This design prioritizes speed over data persistence.

3. Limited Capacity: Cache memory is small relative to main memory. However, its constrained capacity is offset by its speed and intelligent management of frequently accessed data.

Types of Cache Memory

Cache memory encompasses various types, each serving distinct purposes within a computer system’s memory hierarchy. Together with the main memory (RAM), these caches form a multi-tiered memory hierarchy that optimizes data retrieval for the CPU. The three primary levels are:

1. L1 Cache (Level 1 Cache): This cache, the smallest yet swiftest, is usually integrated into the CPU itself. It stores a subset of data and instructions currently in use by the CPU.

2. L2 Cache (Level 2 Cache): Larger than L1, L2 cache can either reside on the CPU chip or as a separate chip on the motherboard. It stores additional data and instructions required by the CPU.

3. L3 Cache (Level 3 Cache): Level 3 cache memory, sometimes referred to as last-level cache (LLC), sits outside the individual processor cores but still in close proximity to them, usually on the same chip in modern processors. It’s much larger than the L1 and L2 caches but is a bit slower.

Another difference is that L1 and L2 caches are typically private to a single processor core, whereas L3 is available to all cores. This allows L3 to play an important role in data sharing and inter-core communication and, depending on the design, in sharing data with the graphics processing unit (GPU).

As for size, L3 cache on modern devices tends to range between 10 MB and 64 MB, depending on the device’s specifications.

The Role of Cache Memory

Cache memory acts as a vital bridge between the high-speed CPU and the relatively slower main memory. When the CPU requires data or instructions to execute a task, it first checks the cache memory. If the needed information is present (a cache hit), the CPU can access it almost instantly. Conversely, if the data isn’t in the cache (a cache miss), the CPU must retrieve it from the main memory, which consumes significantly more time.
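
The cost of hits and misses is often summarized as the average memory access time: the hit time plus the miss rate multiplied by the miss penalty. The sketch below computes it in Python. The function name and the latency figures (1 ns for a hit, 100 ns for a trip to main memory) are illustrative assumptions, not measurements from any particular processor.

```python
def average_access_time(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time: every access pays the hit time,
    and the fraction of accesses that miss also pays the miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


# Assumed numbers: 1 ns cache hit, 100 ns extra for a main-memory access.
for miss_rate in (0.01, 0.05, 0.20):
    amat = average_access_time(1.0, miss_rate, 100.0)
    print(f"miss rate {miss_rate:.0%}: about {amat:.1f} ns per access")
```

Under these assumptions, raising the miss rate from 1% to 20% takes the average access from roughly 2 ns to roughly 21 ns, which is why a high hit rate matters so much.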

Cache memory is sometimes called CPU (central processing unit) memory because it is typically integrated directly into the CPU chip or placed on a separate chip that has a separate bus interconnect with the CPU. Therefore, it is more accessible to the processor, and able to increase efficiency, because it’s physically close to the processor.

In order to be close to the processor, cache memory needs to be much smaller than main memory. Consequently, it has less storage space. It is also more expensive than main memory, as it is a more complex chip that yields higher performance.

Cache Performance and Benefits

Cache memory’s significance in computer performance cannot be overstated, offering a slew of critical advantages:

1. Faster Data Access: Cache memory dramatically reduces CPU wait times for data, leading to swifter task execution.

2. Enhanced Responsiveness: Everyday computing tasks, such as web browsing and opening applications, benefit from cache memory’s storage of frequently accessed data.

3. Lower Power Consumption: Cache memory minimizes the need for power-hungry main memory access, contributing to energy-efficient computing, especially in portable devices.

4. Superior Multitasking: Cache memory facilitates seamless multitasking by keeping frequently used data readily available.

5. Reduced Heat Generation: Speedier data retrieval permits the CPU to operate at lower power levels, curbing heat generation and diminishing the need for elaborate cooling systems.

Cache memory mapping

Caching configurations continue to evolve, but cache memory traditionally works under three different configurations:

- Direct mapped cache has each block mapped to exactly one cache memory location. Conceptually, a direct mapped cache is like rows in a table with three columns: the cache block that contains the actual data fetched and stored, a tag with all or part of the address of the data that was fetched, and a flag bit (the valid bit) that shows whether the row entry holds valid data.

- Fully associative cache mapping is similar to direct mapping in structure but allows a memory block to be mapped to any cache location rather than to a prespecified cache memory location as is the case with direct mapping.

- Set associative cache mapping can be viewed as a compromise between direct mapping and fully associative mapping in which each block is mapped to a subset of cache locations. It is sometimes called N-way set associative mapping, which provides for a location in main memory to be cached to any of “N” locations in the cache.
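
The three configurations differ mainly in how many sets the cache is divided into. The Python sketch below shows one common way an address can be split into a tag, a set index, and a block offset. The cache geometry (8 lines of 64 bytes) and the helper names are hypothetical, chosen only to keep the numbers small.

```python
def split_address(address: int, block_size: int, num_sets: int):
    """Split a byte address into (tag, set_index, block_offset).

    block_size and num_sets are assumed to be powers of two.
    """
    offset = address % block_size         # position inside the cache block
    block_number = address // block_size  # which memory block the byte is in
    set_index = block_number % num_sets   # which set the block must go to
    tag = block_number // num_sets        # identifies the block within its set
    return tag, set_index, offset


# Hypothetical cache: 8 lines of 64 bytes each.
NUM_LINES = 8
BLOCK_SIZE = 64

address = 0x1374
for name, ways in (
    ("direct mapped", 1),               # 8 sets of 1 line
    ("2-way set associative", 2),       # 4 sets of 2 lines
    ("fully associative", NUM_LINES),   # 1 set of 8 lines
):
    num_sets = NUM_LINES // ways
    tag, set_index, offset = split_address(address, BLOCK_SIZE, num_sets)
    print(f"{name}: tag={tag}, set={set_index}, offset={offset}")
```

With one line per set the block has exactly one possible home (direct mapped). With a single set it can go anywhere (fully associative), and the whole block number becomes the tag.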

Application of Cache Memory

Cache memory finds application in diverse computing scenarios:

- CPU Cache: Widely used as CPU cache, it expedites data access for the processor.

- Web Browsing: Web browsers employ cache memory to store web page elements, augmenting the loading speed of frequently visited websites.

- Database Systems: Cache memory is instrumental in database management systems, housing frequently accessed database entries and slashing query response times.

- Gaming Consoles: Cache memory is vital in gaming consoles, storing game data to ensure smooth gameplay and reduced load times.
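
The same idea applies in software. As a minimal sketch, Python’s standard `functools.lru_cache` decorator can keep the results of a slow lookup in memory. The `lookup_user` function and the `calls` counter are hypothetical stand-ins for a real database query.

```python
from functools import lru_cache

calls = {"slow_lookup": 0}  # counts how often the slow path actually runs


@lru_cache(maxsize=128)
def lookup_user(user_id: int) -> str:
    """Stand-in for a slow database query (hypothetical)."""
    calls["slow_lookup"] += 1
    return f"user-{user_id}"


lookup_user(7)
lookup_user(7)  # served from the cache; the function body does not run again
print(calls["slow_lookup"])      # 1
print(lookup_user.cache_info())  # hit and miss counters kept by lru_cache
```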

Advantages and Disadvantages

Advantages of Cache Memory:

- Speed: Cache memory offers rapid data access, heightening overall system performance.

- Efficiency: It mitigates CPU idle time, rendering computing tasks more efficient.

- Energy Efficiency: Cache memory minimizes the need for energy-consuming main memory access.

Disadvantages of Cache Memory:

- Limited Capacity: Cache memory’s limited space may lead to data eviction when new data needs caching.

- Complex Management: Effective cache management strategies are required for optimal utilization.
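
Eviction can be illustrated with a least recently used (LRU) policy, one of several replacement strategies a cache might use. The Python sketch below is a software model only. The `LRUCache` class is a hypothetical helper, and hardware caches usually implement approximations of LRU rather than this exact bookkeeping.

```python
from collections import OrderedDict


class LRUCache:
    """A tiny fixed-capacity cache that evicts the least recently used key."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._entries = OrderedDict()  # oldest entry first, newest last

    def get(self, key):
        """Return the cached value, or None on a miss."""
        if key not in self._entries:
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        return self._entries[key]

    def put(self, key, value):
        """Insert or update a value; return the evicted key, if any."""
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = value
        if len(self._entries) > self.capacity:
            evicted_key, _ = self._entries.popitem(last=False)  # drop the oldest
            return evicted_key
        return None


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")               # "a" becomes the most recently used entry
evicted = cache.put("c", 3)  # the cache is full, so the oldest entry goes
print(evicted)               # b
```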

Data writing policies

Data can be written to memory using a variety of techniques, but the two main ones involving cache memory are:

- Write-through. Data is written to both the cache and main memory at the same time.

- Write-back. Data is initially written only to the cache. It is written to main memory later, typically when the modified block is evicted, so the processor does not have to wait for the slower write to complete.

The way data is written to the cache impacts data consistency and efficiency. For example, when using write-through, more writing needs to happen, which causes latency upfront. When using write-back, operations may be more efficient, but data may not be consistent between the main and cache memories.

One way a computer tracks data consistency is by examining the dirty bit. The dirty bit is an extra bit attached to each cache block that indicates whether the block has been modified since it was loaded. If the dirty bit is set, the cached copy is more recent than the copy in main memory, and the block must be written back to main memory before it is replaced. This situation arises with write-back caching, because the cache and main memory are updated at different times.
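
The two write policies can be compared with a small software model. In this Python sketch a plain dictionary stands in for main memory, and the `SimpleCache` and `CacheLine` classes are hypothetical. The dirty bit here marks a cache line that has been modified but not yet copied to main memory.

```python
class CacheLine:
    def __init__(self, value):
        self.value = value
        self.dirty = False  # True when the line is newer than main memory


class SimpleCache:
    """One-level cache in front of a dict that stands in for main memory."""

    def __init__(self, main_memory: dict, write_back: bool) -> None:
        self.main_memory = main_memory
        self.write_back = write_back
        self.lines = {}  # address -> CacheLine

    def write(self, address, value):
        line = self.lines.setdefault(address, CacheLine(value))
        line.value = value
        if self.write_back:
            line.dirty = True                  # main memory is now stale
        else:
            self.main_memory[address] = value  # write-through: update both

    def evict(self, address):
        """Remove a cached line, flushing it first if it is dirty."""
        line = self.lines.pop(address)
        if line.dirty:
            self.main_memory[address] = line.value


wt = SimpleCache({0x10: 0}, write_back=False)
wt.write(0x10, 42)
print(wt.main_memory[0x10])                        # 42

wb = SimpleCache({0x10: 0}, write_back=True)
wb.write(0x10, 42)
print(wb.main_memory[0x10], wb.lines[0x10].dirty)  # 0 True
wb.evict(0x10)
print(wb.main_memory[0x10])                        # 42
```

In the write-back case main memory still holds the old value until the line is evicted, which is the temporary inconsistency the dirty bit keeps track of.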

Cache Memory, Virtual Memory and Main Memory: A Comprehensive Comparison

In the world of computer architecture and memory management, Cache Memory, Virtual Memory, and Main Memory (often referred to as RAM) are three fundamental components that play distinct yet interconnected roles. In this in-depth comparison, we’ll explore the characteristics, functions, and interactions of these essential memory types.

Cache Memory

1. Location and Size: Cache memory is a small, high-speed memory unit located closest to the Central Processing Unit (CPU). It is typically divided into multiple levels, with L1 cache being the smallest and fastest and L3 cache being larger and slower.

2. Purpose: The primary purpose of cache memory is to store frequently accessed data and instructions, reducing the CPU’s access time to these critical resources. It acts as a buffer between the CPU and the slower main memory (RAM).

3. Access Time: Cache memory offers extremely low latency, providing rapid access to data. When the CPU requests data, it first checks the cache. If the data is found (cache hit), it is retrieved quickly. Otherwise, it results in a cache miss, requiring data retrieval from main memory.

4. Management: Cache memory is typically managed by hardware. Data is automatically fetched from main memory to the cache when needed, and cache lines are replaced using specific algorithms. In multi-core systems, cache coherence protocols keep the copies held in different caches consistent.

5. Size and Cost: Due to its high-speed nature and proximity to the CPU, cache memory is small in size and relatively expensive per unit of storage.

Virtual Memory

1. Location and Size: Virtual memory is an abstraction created by the operating system, allowing programs to use more memory than is physically available. Pages that do not fit in RAM are kept on secondary storage devices such as hard drives or solid-state drives (SSDs), so virtual memory can have a much larger capacity than cache memory or main memory.

2. Purpose: Virtual memory provides a layer of indirection, allowing programs to access memory addresses that may not be present in physical RAM. It enables multitasking and efficient memory management by swapping data in and out of RAM as needed.

3. Access Time: Accessing data in virtual memory is significantly slower than accessing data in cache memory or main memory. This is because data must be retrieved from secondary storage devices, which have higher latency.

4. Management: Virtual memory is managed by the operating system, which uses techniques like paging or segmentation to map virtual addresses to physical addresses. When physical memory becomes scarce, pages are swapped between RAM and secondary storage.

5. Size and Cost: Virtual memory can be much larger in size compared to both cache and main memory. However, it is slower and cheaper per unit of storage due to its reliance on secondary storage devices.

Main Memory (RAM)

1. Location and Size: Main memory, or Random Access Memory (RAM), is a medium-speed memory unit located between cache memory and secondary storage devices. Its size varies but is significantly larger than cache memory.

2. Purpose: RAM stores the data and instructions that the CPU is actively using. It serves as a bridge between the high-speed cache memory and the much slower secondary storage devices. RAM is volatile, meaning its contents are lost when the computer is powered off.

3. Access Time: RAM offers lower latency compared to virtual memory but is slower than cache memory. Access times are measured in nanoseconds, making it significantly faster than accessing data from secondary storage.

4. Management: RAM is managed by both hardware and the operating system. It is responsible for storing the active processes, including the operating system itself and currently running applications.

5. Size and Cost: RAM size varies from computer to computer and can be upgraded. It is more expensive than secondary storage but more affordable than cache memory.

Locality and Cache Performance

Understanding the concept of locality is essential to appreciate how cache memory enhances CPU performance. Locality refers to the tendency of programs to access the same data or instructions repeatedly, or to access data stored close together in memory.

There are two main types of locality:

1. Temporal Locality: This occurs when a program accesses the same data or instructions repeatedly over a short period. Cache memory exploits temporal locality by storing recently accessed data for quick retrieval.

2. Spatial Locality: Spatial locality involves accessing data that is stored near other recently accessed data. Cache memory benefits from spatial locality by loading entire cache lines containing nearby data.
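
The effect of spatial locality can be seen in a small simulation. The Python sketch below models a fully associative LRU cache and counts hits for two traversal orders of the same array. The cache size (4 lines of 8 addresses each) and the 16 by 16 array are assumptions picked to make the contrast obvious.

```python
from collections import OrderedDict


def count_hits(addresses, line_size=8, num_lines=4):
    """Count hits in a fully associative LRU cache of `num_lines` lines,
    where each line holds `line_size` consecutive addresses."""
    cache = OrderedDict()
    hits = 0
    for address in addresses:
        line = address // line_size  # a miss loads this whole line
        if line in cache:
            hits += 1
            cache.move_to_end(line)  # mark as most recently used
        else:
            cache[line] = True
            if len(cache) > num_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return hits


ROWS, COLS = 16, 16  # hypothetical array stored row by row

# Row-major order visits neighbouring addresses one after another.
row_major = [r * COLS + c for r in range(ROWS) for c in range(COLS)]
# Column-major order jumps COLS addresses between consecutive accesses.
column_major = [r * COLS + c for c in range(COLS) for r in range(ROWS)]

print("row-major hits:   ", count_hits(row_major))     # 224 of 256
print("column-major hits:", count_hits(column_major))  # 0 of 256
```

Both loops touch the same 256 addresses. Only the row-major order reuses each loaded line before it is evicted.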

Specialization and functionality

In addition to instruction and data caches, other caches are designed to provide specialized system functions. According to some definitions, the L3 cache’s shared design makes it a specialized cache. Other definitions keep the instruction cache and the data cache separate and refer to each as a specialized cache.

Translation lookaside buffers (TLBs) are also specialized memory caches whose function is to record virtual address to physical address translations.
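
As a rough sketch of the idea, the Python model below puts a TLB in front of a page table. The page size, the page table contents, and the names `translate`, `tlb`, and `stats` are all assumptions made for illustration. A real TLB is a small hardware structure with a fixed number of entries, whereas this dictionary grows without limit.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

# Hypothetical page table: virtual page number -> physical frame number.
page_table = {0: 5, 1: 9, 2: 3}
tlb = {}  # cache of recently used translations
stats = {"tlb_hits": 0, "tlb_misses": 0}


def translate(virtual_address: int) -> int:
    """Translate a virtual address, consulting the TLB before the page table."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number in tlb:
        stats["tlb_hits"] += 1
        frame = tlb[page_number]
    else:
        stats["tlb_misses"] += 1
        frame = page_table[page_number]  # a KeyError here would model a page fault
        tlb[page_number] = frame         # remember the translation for next time
    return frame * PAGE_SIZE + offset


print(hex(translate(0x1ABC)))  # first access to page 1: TLB miss
print(hex(translate(0x1DEF)))  # same page again: TLB hit
print(stats)
```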

Still other caches are not, technically speaking, memory caches at all. Disk caches, for instance, can use DRAM or flash memory to provide data caching similar to what memory caches do with CPU instructions. If data is frequently accessed from the disk, it is cached into DRAM or flash-based silicon storage technology for faster access time and response.

Specialized caches are also available for applications such as web browsers, databases, network address binding and client-side Network File System protocol support. These types of caches might be distributed across multiple networked hosts to provide greater scalability or performance to an application that uses them.

3 Examples of How Cache Memory is Used

The use of cache memory isn’t only essential for on-premises and personal devices. It’s also used heavily in data center servers and in cloud computing offerings. Here are a few examples highlighting cache memory solutions.

Beat

Beat is a ride-hailing application based in Greece with over 700,000 drivers and 22 million active users globally. Founded in 2011, it’s now the fastest-growing app in Latin America, mainly in Argentina, Chile, Colombia, Peru, and Mexico.

During its hypergrowth period, Beat’s application started experiencing outages due to bottlenecks in the data delivery system. It had already been working with AWS and using Amazon ElastiCache, but an upgrade in configuration was desperately needed.

“We could split the traffic among as many instances as we liked, basically scaling horizontally, which we couldn’t do in the previous solution,” said Antonis Zissimos, senior engineering manager at Beat. “Migrating to the newer cluster mode and using newer standard Redis libraries enabled us to meet our scaling needs and reduce the number of operations our engineers had to do.”

In less than two weeks, Beat was able to reset ElastiCache and reduce load per node by 25–30%, cut computing costs by 90%, and eliminate 90% of staff time spent on the caching layer.

Sanity

Sanity is a software service and a platform that helps developers better integrate content by treating it as structured data. With headquarters in the U.S. and Norway, its fast and flexible open-source editing environment, Sanity Studio, supports a fully customizable user interface (UI) and live-hosted data storage.

In order to support the real-time and fast delivery of content to the developers using its platform, Sanity needed to optimize its distribution. Working with Google Cloud, it turned to Cloud CDN to cache content in various servers located close to major end-user internet service providers (ISPs) globally.

“In the two years we’ve been using Google Kubernetes Engine, it has saved us plenty of headaches around scalability,” said Simen Svale Skogsrud, co-founder and CTO of Sanity. “It helps us to scale our operations to support our global users while handling issues that would otherwise wake us up at 3 a.m.”

Sanity is now able to process five times the traffic using Google Kubernetes Engine and support the accelerated distribution of data through an edge-cached infrastructure around the globe.

SitePro

SitePro is an automation software solution that offers real-time data capture at the source through an innovative end-to-end approach for the oil and gas industries. Based in the U.S., it’s an entirely internet-based solution that at one point controlled nearly half of the richest oil-producing area in the country.

To maintain control over the delicate operation, SitePro sought the help of Microsoft Azure, employing Azure Cache for Redis, and managed to boost its operations. Now, SitePro is in a position to start investing in green tech and more environmentally-friendly services and products.

“Azure Cache for Redis was the only thing that had the throughput we needed,” said Aaron Phillips, co-CEO at SitePro. “Between Azure Cosmos DB and Azure Cache for Redis, we’ve never had congestion. They scale like crazy.

“The scalability of Azure Cache for Redis played a major role in the speed at which we have been able to cross over into other industries.”

Thanks to Azure, SitePro was able to simplify its architecture and significantly improve data quality. It has now eliminated the gap in timing at no increase in costs.

Conclusion

In the fast-paced realm of technology, where time equates to performance, cache memory emerges as an unassuming yet indispensable ally. Its proficiency in storing frequently accessed data and instructions translates into minimized wait times, culminating in seamless computing experiences.

Cache memory catalyzes software development, elevates web browsing, and underpins countless applications across the digital landscape. Embrace this uncelebrated hero, optimizing its capabilities with a keen eye on cache memory mapping and tailored cache strategies.